Today we will show you how to reduce the load on your website. This solution helps protect your website from search engine bots and malicious bots.

Search Bots

For search engine bots, we will use the robots.txt file.

We need to limit the crawl rate, which means asking Google, Bing, Baidu and other bots to wait a set interval between requests to your website.

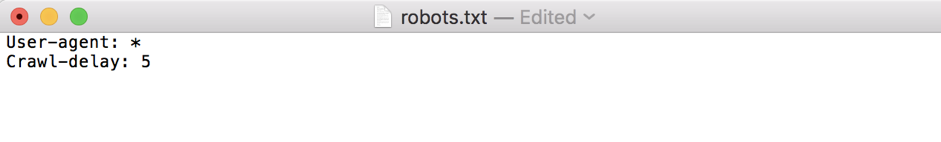

Create a robots.txt file in the root directory of your website with the following content:

    User-agent: *
    Crawl-delay: 5

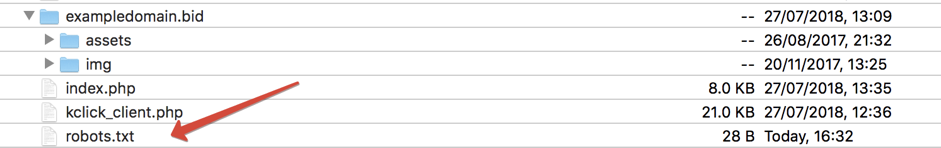

The file must be located in the root directory of your website, so that it is available at the /robots.txt path.
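If you prefer to create the file from the server’s shell, here is a minimal sketch. The function name write_robots_txt and the /var/www/html path in the usage line are hypothetical; replace the path with your website’s actual root directory.

```shell
#!/bin/sh
# Minimal sketch: write a robots.txt that asks every crawler to wait
# DELAY seconds between requests. The function name is hypothetical.
write_robots_txt() {
    web_root=$1        # your website's root directory (you must supply it)
    delay=${2:-5}      # seconds between requests; 5 matches the example above
    printf 'User-agent: *\nCrawl-delay: %s\n' "$delay" > "$web_root/robots.txt"
}

# Usage (hypothetical path, replace it with your site root):
# write_robots_txt /var/www/html 5
```

Note that the function overwrites any existing robots.txt, so merge the Crawl-delay line by hand if your site already has one.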

Done! We’ve asked search engine bots to request pages from our website no more than once every 5 seconds.

However, some bots ignore Crawl-delay (Googlebot, for example, does not support this directive). In that case, you can limit the crawl rate in the search engine’s webmaster tools: https://webmasters.stackexchange.com/a/56773.

Many search engines offer such settings.

Malicious Bots: How to Ban Them on a Server

First, make sure that it really is bots that are overloading your website and making it unavailable.

To find out which bots visit your website, check the logs on the server where your website is hosted. Look for a large number of requests with the same user agent, e.g. MauiBot, MJ12bot, SEMrushBot or AhrefsBot.
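One way to spot such user agents is to count requests per user agent in the access log. The sketch below assumes Nginx’s default "combined" log format and a log at /var/log/nginx/access.log; both may differ on your server, and the function name top_user_agents is hypothetical.

```shell
#!/bin/sh
# Count requests per User-Agent in an Nginx access log, most frequent first.
# Assumes the default "combined" log format: when a line is split on double
# quotes, the User-Agent is the sixth field.
top_user_agents() {
    log_file=${1:-/var/log/nginx/access.log}   # assumed default log location
    limit=${2:-20}                              # how many user agents to show
    awk -F'"' '{ print $6 }' "$log_file" | sort | uniq -c | sort -rn | head -n "$limit"
}

# Usage:
# top_user_agents /var/log/nginx/access.log 20
```

Each output line is a request count followed by a user agent string; a bot name near the top with a very large count is a good candidate for blocking.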

Search for “blocking bad bots” and you will find long lists of such bots.

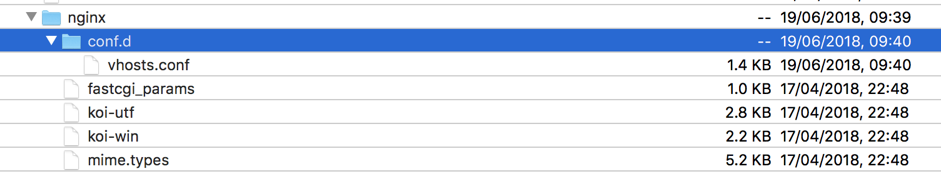

We will block these bots in the Nginx configuration file.

Important! This solution only works if the tracker was installed with our auto-installation script.

Add the following code to the server section of /etc/nginx/conf.d/vhosts.conf:

    if ($http_user_agent ~* (MauiBot|MJ12bot|SEMrushBot|AhrefsBot)) {
        return 403;
    }
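Before enabling the rule, you may want to estimate how many logged requests it would have blocked. This sketch uses grep -Ei, a case-insensitive regular expression match, as an approximation of Nginx’s ~* operator; for this plain list of names both match the same user agents. It checks only the user agent field, assumes the default "combined" log format, and the function name count_blocked_requests is hypothetical.

```shell
#!/bin/sh
# Same bot list as in the Nginx rule above.
BOT_PATTERN='MauiBot|MJ12bot|SEMrushBot|AhrefsBot'

# Print how many requests in the log have a User-Agent matching BOT_PATTERN.
count_blocked_requests() {
    log_file=${1:-/var/log/nginx/access.log}   # assumed default log location
    # Field 6 (split on double quotes) is the User-Agent in "combined" format.
    # grep -c prints 0 and exits with status 1 when nothing matches; "|| true"
    # keeps that from being treated as an error.
    awk -F'"' '{ print $6 }' "$log_file" | grep -Eic "$BOT_PATTERN" || true
}

# Usage:
# count_blocked_requests /var/log/nginx/access.log
```

Restricting the match to the user agent field avoids counting requests whose URL or referer merely contains one of the bot names.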

If you have SSL certificates installed, make the same change for every domain: for example, for domain.com, edit the /etc/nginx/conf.d/domain.com.conf file.

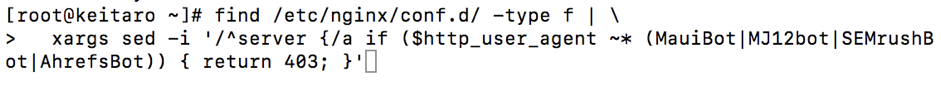

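If you have added SSL, the easiest way is to run the following command in your server’s terminal. It inserts the rule after every line that starts with "server {" in the files in /etc/nginx/conf.d/. Run it only once: running it again adds the rule a second time.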

    find /etc/nginx/conf.d/ -type f -name '*.conf' -print0 | \
        xargs -0 sed -i '/^server {/a if ($http_user_agent ~* (MauiBot|MJ12bot|SEMrushBot|AhrefsBot)) { return 403; }'
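If you may need to run the change more than once, an alternative is a loop that skips files which already contain the rule. This is a sketch, not the command above: the function name add_bot_rule is hypothetical, it only processes *.conf files directly inside the given directory, it also matches indented "server {" and "server{" lines, and, like the one-liner, it relies on GNU sed’s one-line form of the a command.

```shell
#!/bin/sh
# The rule to insert; $http_user_agent stays literal thanks to single quotes.
RULE='if ($http_user_agent ~* (MauiBot|MJ12bot|SEMrushBot|AhrefsBot)) { return 403; }'

# Insert RULE after every "server {" line in *.conf files in a directory,
# skipping files that already contain it, so the function is safe to re-run.
add_bot_rule() {
    conf_dir=${1:-/etc/nginx/conf.d}
    for conf in "$conf_dir"/*.conf; do
        [ -f "$conf" ] || continue                      # glob matched nothing
        if grep -qF 'MauiBot|MJ12bot|SEMrushBot|AhrefsBot' "$conf"; then
            echo "skip: $conf (rule already present)"
            continue
        fi
        # GNU sed: append RULE as a new line after each matching line.
        sed -i "/^[[:space:]]*server[[:space:]]*{/a $RULE" "$conf"
        echo "processed: $conf"
    done
}

# Usage:
# add_bot_rule /etc/nginx/conf.d && nginx -t
```

Running nginx -t afterwards confirms that the edited files still parse before you reload Nginx.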

Next, check the configuration for errors and reload Nginx:

    nginx -t && systemctl reload nginx

To check that everything works correctly, run:

    curl -I -H 'User-Agent: MauiBot' http://localhost

The response should start with:

    HTTP/1.1 403 Forbidden

After that, these bots will no longer be able to access the website.
